In the ever-evolving landscape of software development, striking the right balance between a robust architecture and resisting the urge to over-engineer can often feel like walking a tightrope. While it is essential to build systems that can withstand the test of time and scale, overzealous design introduces unnecessary complexity, which in turn drives up development and maintenance costs. Not to mention the potentially steep learning curve for new team members.

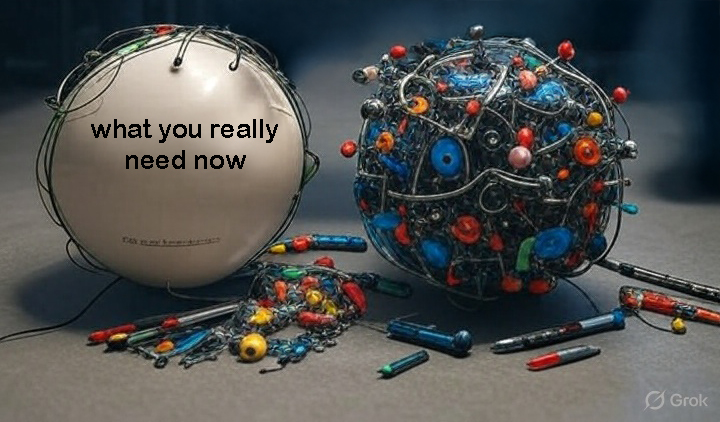

Over-engineering, simply put, is the act of creating a solution that is more complex than the problem at hand requires. It's the equivalent of driving a Ferrari to get groceries every day: effective and certainly doable, but overkill.

So, why does over-engineering happen?

Typically, it stems from two places: business owners latching onto buzzwords (and that “best practices” article they skimmed), and engineers with an overeager desire to “future-proof” and to really “engineer” a solution. Non-technical business leaders might have done some Googling (or ChatGPT’d), or talked with other non-technical people, and concluded that an infinitely scalable solution out of the box is the best, and only, option. Engineers, meanwhile, sometimes try to account for every conceivable future scenario in an effort to avoid the dreaded “technical debt.” In doing so, they lose sight of the immediate goals and end up with a system that is overcomplicated and often inflexible. Solving “problems” that don’t exist is a waste of time and effort: wait until you can see the “problem” on the horizon, or until it is genuinely affecting the business, before addressing it.

Consider scalability. While it's crucial to design systems that can scale with increasing demand, it's equally important to avoid premature optimization. Over-engineering for scalability can lead to unnecessary service layers (in a serverless/cloud-based architecture) and convoluted code, making it harder to adapt to the application's actual growth patterns.

Moreover, over-engineering can have profound implications for team dynamics. A codebase riddled with complexity becomes a breeding ground for confusion. Team members may struggle to understand the intricacies of the system, which slows development and increases maintenance costs.

So, how can we navigate this delicate balance?

The key lies in adopting a pragmatic approach. Rather than attempting to predict the future, focus on the immediate requirements of the project. Embrace simplicity, modular design, and iterative development. Doing so not only reduces the likelihood of over-engineering but also produces a codebase that is more maintainable and adaptable to change.

Will an N-Tier architecture (gasp!) get your business to market faster and cheaper than a bleeding-edge serverless/cloud-native approach? In most early-stage cases — absolutely. N-Tier might be "legacy" by today’s standards, but when implemented thoughtfully, it’s predictable, maintainable, and scales just fine for the majority of use cases. You don’t need to impress your investors with Kubernetes diagrams on day one — you need paying customers and working software.
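To make "N-Tier" a little more concrete, here is a minimal sketch, assuming a classic three-tier split (presentation, business logic, data access) in plain Python. Every name in it (`Customer`, `CustomerRepository`, `CustomerService`, `register_handler`) is hypothetical, and an in-memory dict stands in for a real database. It is an illustration of the layering idea, not a recommended production design.

```python
from dataclasses import dataclass
from typing import Dict, Optional


# --- Data tier: hypothetical in-memory repository standing in for a database ---
@dataclass
class Customer:
    id: int
    name: str
    email: str


class CustomerRepository:
    """Data access only; the dict could later be swapped for a real database."""

    def __init__(self) -> None:
        self._rows: Dict[int, Customer] = {}
        self._next_id = 1

    def add(self, name: str, email: str) -> Customer:
        customer = Customer(self._next_id, name, email)
        self._rows[customer.id] = customer
        self._next_id += 1
        return customer

    def get(self, customer_id: int) -> Optional[Customer]:
        return self._rows.get(customer_id)


# --- Business tier: validation and rules live here, not in the UI or storage ---
class CustomerService:
    def __init__(self, repo: CustomerRepository) -> None:
        self._repo = repo

    def register(self, name: str, email: str) -> Customer:
        # Deliberately simple rule; a real app would validate more carefully.
        if "@" not in email:
            raise ValueError("invalid email address")
        return self._repo.add(name.strip(), email.lower())


# --- Presentation tier: turns results into a response for the caller ---
def register_handler(service: CustomerService, payload: dict) -> dict:
    try:
        customer = service.register(payload["name"], payload["email"])
        return {"status": 201, "body": {"id": customer.id, "name": customer.name}}
    except ValueError as exc:
        return {"status": 400, "body": {"error": str(exc)}}


if __name__ == "__main__":
    service = CustomerService(CustomerRepository())
    print(register_handler(service, {"name": " Ada ", "email": "ADA@example.com"}))
    print(register_handler(service, {"name": "Bob", "email": "not-an-email"}))
```

Each tier only talks to the one directly below it, so swapping the dict for a real database, or the handler for a web framework route, is a local change rather than a rewrite. That is the kind of predictability the N-Tier approach buys you early on.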

So yes, leverage cloud-managed services where it makes sense. But stop assuming you need to architect like you're running Facebook on launch day. Build what solves the business problem today — and earn the right to scale tomorrow.